We first wrote about the AI Act more than one year ago.

Since then, things have changed several times, and now the moment has come.

The EU Council gave the final green light to the groundbreaking AI Act – the world's first comprehensive set of rules for AI.

In this blog post, we will dive into the different categorisations of AI systems according to the final text of the AI Act.

Are you ready to jump into it?

PS: As the regulation is really long and complex, we will publish other content specific to each class.

When will it be applicable?

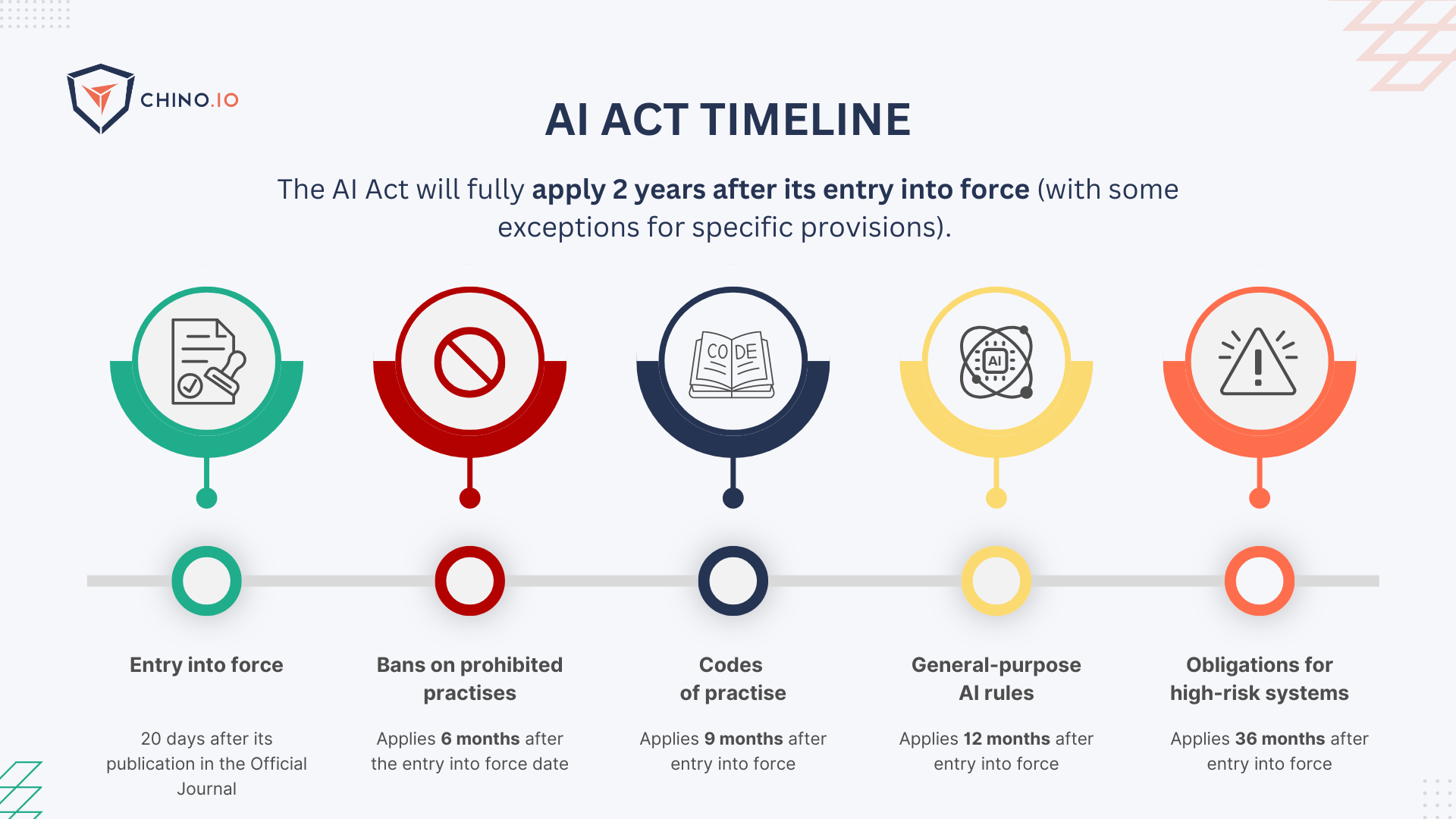

After being signed, the Act will be published in the EU’s Official Journal and enter into force 20 days after this publication.

The AI Act will fully apply two years after it enters into force (with some exceptions for specific provisions).
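To make the timeline concrete, here is a minimal sketch of the date arithmetic described above. The publication date is a placeholder rather than the real one, the function names are our own, and the specific provisions with different start dates are not modelled.

```python
from datetime import date, timedelta


def add_years(d: date, years: int) -> date:
    """Return the same calendar day `years` later (29 Feb falls back to 28 Feb)."""
    try:
        return d.replace(year=d.year + years)
    except ValueError:
        return d.replace(year=d.year + years, day=28)


def ai_act_milestones(publication: date) -> dict[str, date]:
    """Derive the two headline dates from the Official Journal publication date."""
    # Entry into force: 20 days after publication.
    entry_into_force = publication + timedelta(days=20)
    # Full application: two years after entry into force (exceptions ignored).
    full_application = add_years(entry_into_force, 2)
    return {
        "entry_into_force": entry_into_force,
        "full_application": full_application,
    }


# Placeholder publication date, used only to illustrate the calculation.
milestones = ai_act_milestones(date(2024, 7, 1))
for name, day in milestones.items():
    print(f"{name}: {day.isoformat()}")
```

Swap in the actual publication date once it is known, and treat the result as a planning aid only: the dates written into the Regulation itself are the ones that count.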

What is an AI System?

The EU Commission set the stage for the AI Act by defining what an AI system is. Because technology and markets change quickly, the legal framework's definition aims to be as technology-neutral and future-proof as possible.

According to the final text, an AI system is "a machine-based system that is designed to operate with varying levels of autonomy and that may exhibit adaptiveness after deployment, and that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments".

So, an AI system is basically any machine-based setup that can work on its own to some degree. It can adapt after it’s been launched, and it figures out how to produce outputs like predictions, content, recommendations, or decisions based on the data it gets. These outputs can then impact both the digital and physical world.

AI systems are a step above your basic software or programmed tools. They stand out because of their ability to infer and learn. But don’t worry, if it's just a system running on fixed rules set by humans, it doesn’t count as AI under this definition. The magic sauce here is the system's ability to infer.

If you want to know more about the definition, make sure to check our first article!

The actors defined by the AI Act

The AI Act identifies key actors essential to developing, deploying, and regulating AI systems.

- Provider: anyone who develops an AI system and sells it or makes it available under their own name or brand, whether for money or for free.

- Deployer: anyone who uses an AI system within their organisation, except when the AI system is used for personal, non-professional activities.

- Importer: a person (or company) based in the EU that brings an AI system from outside the EU to sell or distribute it under the name or brand of the original creator.

- Distributor: a person (or company) in the supply chain, other than the provider or importer, that makes an AI system available in the EU market.

AI Systems excluded from the AI Act

Before digging into the classification, requirements, and obligations, let’s look at the few types of AI excluded from it. If you are one of these, you are lucky, and you can skip the whole article 😉

- Research, testing, or development of AI systems before they are placed on the market or put into service is excluded, although these activities must still comply with other applicable EU laws. This exclusion does not cover testing in real-world conditions.

- AI systems used only for military, defense, or national security purposes are excluded, regardless of who conducts these activities.

- AI systems or models created exclusively for scientific research and development are also excluded.

- AI systems released under free and open-source licences are generally exempt, unless they are placed on the market or put into service as high-risk AI systems, or fall under Article 5 (prohibited practices) or Article 50 (transparency obligations).

For example, even open-source AI systems are subject to the AI Act if they involve monetisation or are classified as high-risk.

The Final AI Classification

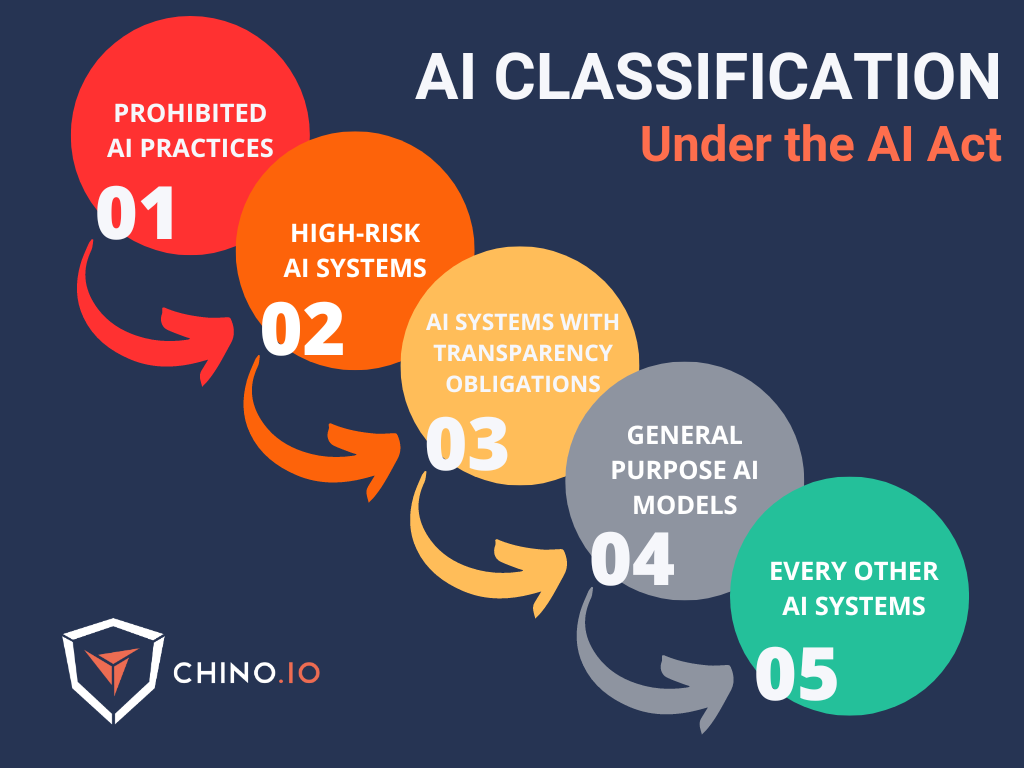

The AI Act classifies AI systems into five categories, each with its own definition and therefore its own obligations.

In this blog post, we will focus on the first four classes, as they may have consequences for businesses developing AI.

For each one of these categories, you will need to comply with different requirements and obligations!
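As a rough first pass, the classification can be treated as a short questionnaire. The sketch below is only an illustration: the class names follow the headings of this article, the yes/no fields are hypothetical, and the assumption that a prohibited practice overrides everything while the other classes can overlap is our simplification, not a legal test.

```python
from dataclasses import dataclass
from enum import Enum


class AIActClass(Enum):
    PROHIBITED = "Prohibited AI practice"
    HIGH_RISK = "High-risk AI system"
    TRANSPARENCY = "AI system with transparency obligations"
    GPAI = "General-purpose AI model"
    OTHER = "Every other AI system"


@dataclass
class SelfAssessment:
    """Hypothetical yes/no answers from an internal review."""
    uses_prohibited_practice: bool = False
    is_high_risk: bool = False
    has_transparency_trigger: bool = False
    is_general_purpose_model: bool = False


def triage(answers: SelfAssessment) -> list[AIActClass]:
    """Return the classes that may apply, most restrictive first."""
    # Assumption: a prohibited practice ends the analysis immediately.
    if answers.uses_prohibited_practice:
        return [AIActClass.PROHIBITED]
    classes = []
    if answers.is_high_risk:
        classes.append(AIActClass.HIGH_RISK)
    if answers.has_transparency_trigger:
        classes.append(AIActClass.TRANSPARENCY)
    if answers.is_general_purpose_model:
        classes.append(AIActClass.GPAI)
    # Anything that matched nothing above lands in the residual class.
    return classes or [AIActClass.OTHER]


# Example: a high-risk system that also interacts directly with people.
result = triage(SelfAssessment(is_high_risk=True, has_transparency_trigger=True))
print([c.value for c in result])
```

A checklist like this helps you decide which sections to read first; it does not replace a proper legal assessment.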

Prohibited AI Practices

This is the first and most feared class: the AI systems considered prohibited in the EU. (For those who love legal terms, this is Article 5, which lists all the prohibited AI practices.)

Please note: this means that if your AI systems fall into this category, you are not allowed to market and sell your service!

According to the Act, you may be in stormy waters in any of the following cases:

- Using subliminal or manipulative techniques to influence people's behaviour without their awareness is prohibited.

- Exploiting vulnerabilities due to age, disability, or specific social or economic situations to manipulate behaviour is prohibited.

- Biometric categorisation systems that infer race, political opinions, trade union membership, religious beliefs, or sexual orientation from biometric data are forbidden.

- Evaluating or classifying people based on social behaviour or personal characteristics, leading to unfair treatment in unrelated social contexts, is prohibited.

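- The use of real-time remote biometric identification systems in public spaces for law enforcement is prohibited, except in a few narrowly defined cases where it is strictly necessary for a specific purpose (such as searching for missing persons or preventing an imminent terrorist threat).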

- Making risk assessments based solely on profiling or personality traits to predict criminal behaviour is not allowed.

- Creating or expanding facial recognition databases by scraping images from the internet or CCTV footage without targeting specific individuals is forbidden.

- Inferring emotions in the workplace or educational institutions is prohibited, except for AI systems used for medical purposes.

While medical devices for emotion recognition are permitted, AI systems for emotion recognition and well-being in the workplace may be banned, affecting many HR and workplace wellness companies.

High-Risk AI systems

A large share of the digital health AI systems on the market, in both B2B and B2C scenarios, may fall into this category.

Digital health startups and SMEs: get ready to do some extra homework.

Many existing AI solutions used in healthcare are integrated into medical devices regulated under the MDR. Most of them will qualify as high-risk AI systems under the AI Act.

👉 What does this mean? Digital health companies must ensure compliance with both the MDR and the corresponding requirements of the AI Act. This applies to any DTx, Decision Support System, or other Software as a Medical Device that uses AI.

AI systems are also considered high-risk if they fall into one of these areas:

- Remote biometrics: often used to control access to secure areas (such as airports or banks).

- Critical infrastructure: systems that directly affect the public's health, safety, and welfare, and whose failure could cause catastrophic loss of life, assets, or privacy.

- Education and vocational training: for personalised education and interactive content.

- Employment and worker management: systems that help companies increase operational efficiency, make faster and better-informed decisions, etc.

- Access to and enjoyment of essential private and public services: used by public authorities for assessing eligibility for benefits and services (including their allocation, reduction, revocation, or recovery).

- Law enforcement: to anticipate and prevent crimes (by crawling and analysing historical data).

- Migration and border control: to assess individuals for risks to public security, irregular migration, and public health, and also to predict people's behaviour.

- Administration of justice: to support public authorities in administering justice.

High-risk AI systems (and certain deployers of high-risk AI systems that are public entities) will need to be registered in the EU database for high-risk AI systems.

Deployers of an emotion recognition system will have to inform the natural persons who are exposed to it.

Requirements for high-risk AI systems

Being “labelled” as a High-Risk AI System means that you have requirements to fulfil to be able to sell your AI.

- Risk Management System: An ongoing process that evaluates risks, identifies measures, and tests throughout the system's entire lifecycle (Art. 9).

- Data Governance: Training, validating, and testing data sets must follow proper data governance practices, ensuring quality, quantity, and review for potential bias (Art. 10).

- Technical Documentation: Prepare technical documentation proving the system complies with the Regulation, as specified in Annex IV (Art. 11).

- Record-Keeping: High-risk AI systems must automatically record events throughout their lifetime (Art. 12).

- Transparency and Information for Deployers: The system must be transparent enough for deployers to correctly understand and use its output. Detailed instructions for use and maintenance must be provided (Art. 13).

- Human Oversight: A human must supervise the system, be able to decide on its use and stop it if necessary ("human law by default") (Art. 14).

- Accuracy, Robustness, and Cybersecurity: The system must ensure high accuracy, robustness, and cybersecurity (Art. 15).
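To give a feel for the record-keeping requirement in the list above, here is a minimal sketch of automatic event logging using Python's standard `logging` module. The logger name, the event fields, and the in-memory stream are illustrative assumptions; the Act does not prescribe a format, and a real system would write to durable, tamper-evident storage.

```python
import io
import json
import logging
from datetime import datetime, timezone


class JsonLineFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "timestamp": datetime.fromtimestamp(
                record.created, tz=timezone.utc
            ).isoformat(),
            "event": record.getMessage(),
            # `details` is a hypothetical field passed through `extra=`.
            "details": getattr(record, "details", {}),
        }
        return json.dumps(entry, sort_keys=True)


def build_event_logger(stream) -> logging.Logger:
    """Create a logger that appends structured events to `stream`."""
    logger = logging.getLogger("ai_system.events")  # hypothetical name
    logger.setLevel(logging.INFO)
    logger.handlers.clear()
    logger.propagate = False
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonLineFormatter())
    logger.addHandler(handler)
    return logger


# In-memory stream for demonstration purposes only.
log_stream = io.StringIO()
events = build_event_logger(log_stream)
events.info(
    "inference",
    extra={"details": {"model_version": "1.2.0", "input_id": "case-001"}},
)
print(log_stream.getvalue().strip())
```

One JSON object per line keeps the log easy to append to and easy to audit later, which is the point of recording events throughout the system's lifetime.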

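For high-risk AI systems in most of the areas listed above (points 2 to 8 of Annex III, that is, everything except biometrics), providers must follow a conformity assessment based on internal control, without the involvement of a notified body.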

We will write about this conformity assessment in another blog, so make sure you stay updated with the latest news!

Specific AI Systems with transparency obligations

In the context of the AI Act, transparency means the ability to understand how AI systems make decisions.

This includes knowing what data they're trained on, how they process it, and the rationale behind the outputs.

- Interacting with People: If your AI system interacts directly with people, make sure it informs them they are interacting with an AI.

- Synthetic Content: If your AI generates synthetic audio, images, video, or text, ensure the outputs are marked in a machine-readable format and clearly identifiable as artificially generated or manipulated.

- Technical Solutions: Ensure your AI solutions are effective, interoperable, robust, and reliable, considering content types, implementation costs, and current technical standards.

- Emotion and Biometric Recognition: If your AI uses emotion recognition or biometric categorisation, inform people exposed to it about its operation.

- Deep Fakes and Public Interest Content: If your AI creates or manipulates content like deep fakes or generates text disclosing public interest information, disclose that the content is artificially generated or manipulated.

- Clear Information: Provide the necessary information to people clearly and during their first interaction or exposure to the AI system.
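For the synthetic content obligation in the list above, one possible approach is to ship a machine-readable manifest alongside each output and add a visible label for people. The sketch below is an assumption-heavy illustration: the field names and the label text are hypothetical and do not follow any particular standard, and real deployments may prefer established provenance or watermarking schemes.

```python
import hashlib
import json


def build_provenance_manifest(content: bytes, generator: str) -> str:
    """Return a JSON manifest flagging `content` as AI-generated.

    The field names are hypothetical, chosen only for this example.
    """
    manifest = {
        "ai_generated": True,
        "generator": generator,
        # The hash ties the manifest to one specific output.
        "content_sha256": hashlib.sha256(content).hexdigest(),
    }
    return json.dumps(manifest, sort_keys=True)


def human_readable_label(text: str) -> str:
    """Prefix generated text with a visible notice for the reader."""
    return f"[AI-generated content] {text}"


generated = "Here is a summary of your lab results."
print(human_readable_label(generated))
print(build_provenance_manifest(generated.encode("utf-8"), "demo-model"))
```

The manifest covers the machine-readable side and the label covers the human side; the Act expects both to be clear from the first interaction.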

General Purpose AI Model (GPAI)

GPAI models are AI models that display significant generality and are capable of competently performing a wide range of distinct tasks, regardless of the way the model is placed on the market, and that can be integrated into a variety of downstream systems or applications.

A GPAI model is classified as having systemic risk if it meets either of these conditions:

- The model has high-impact capabilities, which are presumed when the cumulative amount of computation used for its training, measured in floating point operations (FLOPs), is greater than 10^25.

- The model has capabilities or an impact equivalent to those of high-impact models, with regard to the criteria set out in Annex XIII.
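To get a feel for the 10^25 threshold, here is a back-of-the-envelope check. The threshold comes from the text above; the estimate of roughly 6 operations per parameter per training token is a common rule of thumb and not part of the AI Act, and both example models are hypothetical.

```python
# Presumption threshold for high-impact capabilities, in cumulative
# floating point operations used for training.
SYSTEMIC_RISK_THRESHOLD_FLOPS = 10**25


def estimate_training_flops(n_parameters: int, n_training_tokens: int) -> int:
    """Rule-of-thumb estimate: about 6 operations per parameter per token.

    This heuristic is an assumption for illustration, not a method
    defined by the AI Act.
    """
    return 6 * n_parameters * n_training_tokens


def presumed_high_impact(training_flops: int) -> bool:
    """True when training compute is strictly greater than the threshold."""
    return training_flops > SYSTEMIC_RISK_THRESHOLD_FLOPS


# Hypothetical models: 7 billion parameters on 2 trillion tokens,
# and 1 trillion parameters on 10 trillion tokens.
small = estimate_training_flops(7_000_000_000, 2_000_000_000_000)
large = estimate_training_flops(1_000_000_000_000, 10_000_000_000_000)

print(f"small: {small:.1e} -> {presumed_high_impact(small)}")
print(f"large: {large:.1e} -> {presumed_high_impact(large)}")
```

A model below the threshold can still be designated as having systemic risk under the second condition, so treat this purely as a first sanity check.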

Every other AI system

Simply put, any AI system that does not belong to one of the other classes falls into this one.

Still, if you are in this category, you will need to satisfy some obligations such as the so-called AI Literacy.

Here, you need to document your team's skills and knowledge about developing AI. The goal is to allow providers, deployers, and affected persons, taking into account their respective rights and obligations, to make informed deployments of AI systems.

Since detailed information about this is not yet available, we suggest you document clearly and transparently all the steps needed to develop your AI (with a special focus on training).

Penalties

What about not complying with the AI Act? Well, as we have seen for the GDPR, fines are the main way for Authorities to fix things.

Fines for infringements of the AI Act are set as a percentage of the company’s global annual turnover in the previous financial year (or a predetermined amount, whichever is higher).

SMEs and start-ups will be subject to proportional administrative fines.
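The "whichever is higher" rule is easy to sketch. In the example below, the 7% and EUR 35 million figures are what we understand Article 99 to set for prohibited practices, and the "whichever is lower" treatment of SMEs is our reading of the same article; treat both as assumptions to check against the final text. The function name and the turnover values are hypothetical.

```python
def fine_ceiling(
    turnover_eur: int, percent: int, fixed_eur: int, sme: bool = False
) -> int:
    """Return the maximum fine, in euros, for one tier of infringement.

    turnover_eur: global annual turnover of the previous financial year.
    percent:      percentage of turnover for the tier (for example 7).
    fixed_eur:    predetermined amount for the tier.
    sme:          assumption: for SMEs the lower of the two values applies.
    """
    turnover_based = turnover_eur * percent // 100
    if sme:
        return min(turnover_based, fixed_eur)
    return max(turnover_based, fixed_eur)


# Hypothetical companies, using the assumed tier for prohibited practices.
print(fine_ceiling(1_000_000_000, 7, 35_000_000))          # large group
print(fine_ceiling(100_000_000, 7, 35_000_000))            # mid-sized firm
print(fine_ceiling(10_000_000, 7, 35_000_000, sme=True))   # start-up
```

These are ceilings, not the fine itself: the authorities set the actual amount case by case.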

Looking at how to be GDPR compliant? Chino.io, your trusted compliance partner

Working with experts can reduce time to market and technical debt and ensure a clear roadmap that you can showcase to partners and investors.

At Chino.io, we have been combining our technological and legal expertise to help hundreds of companies like yours navigate regulatory frameworks and regulations, enabling successful launches and reimbursement approvals.

We offer tailored solutions to support you in meeting the GDPR, HIPAA, AI Act, DVG, or DTAC requirements mandated for listing your product as DTx or DiGA.

Want to know how we can help you? Reach out to us and learn more.