What to expect this year

Note: This is the second part of our series on A.I. Read the first part here!

The EU is preparing the world’s first proposal for regulating AI. As happened with GDPR, this new regulation will likely become a global standard and a blueprint for nations outside the EU.

The EU is sensitive to this type of topic and is setting up initiatives and frameworks that will help researchers and innovative companies develop better solutions with embedded AI.

Towards a regulated system: the EU draft regulation on AI

The AI Act is a proposed European law on Artificial Intelligence and will become the first set of rules in the world to regulate AI.

The regulation will apply to any AI system within the European Union. As we can imagine, it will have tremendous implications for all innovative organizations using AI around the world.

It will apply to providers, users, importers, and distributors of AI systems and also to non-EU companies that supply AI systems in the EU.

As we will see later, this draft regulation adopts a risk-based approach: some AI uses will be prohibited, others (known as HRAIS, High-Risk AI Systems) will have to meet onerous requirements, and many will remain free to use.

The draft act contains obligations that apply to AI systems from the design stage. Startups and companies should start to think and prepare now for this regulation in order to be ready to conquer the market when it comes into force.

A 2020 study carried out by McKinsey highlights that only 48% of the companies working on AI reported recognizing regulatory-compliance risks, and only 28% reported actively working to address them.

Although not yet in force, the set of rules provides a clear vision of the future of AI regulation as a whole. Now is the time to begin understanding those implications and to prepare actions that mitigate the risks that can emerge.

What is an AI system?

The EU sets the baseline for this regulation by defining what an AI system is.

The definition of an Artificial Intelligence system in the legal framework aims to be as technology-neutral and future-proof as possible, considering the fast technological and market developments related to AI. According to the draft regulation:

"The definition should be based on the key functional characteristics of the software, in particular the ability, for a given set of human-defined objectives, to generate outputs such as content, predictions, recommendations, or decisions which influence the environment with which the system interacts, be it in a physical or digital dimension.

AI systems can be designed to operate with varying levels of autonomy and be used on a stand-alone basis or as a component of a product, irrespective of whether the system is physically integrated into the product (embedded) or serve the functionality of the product without being integrated therein (non-embedded)."

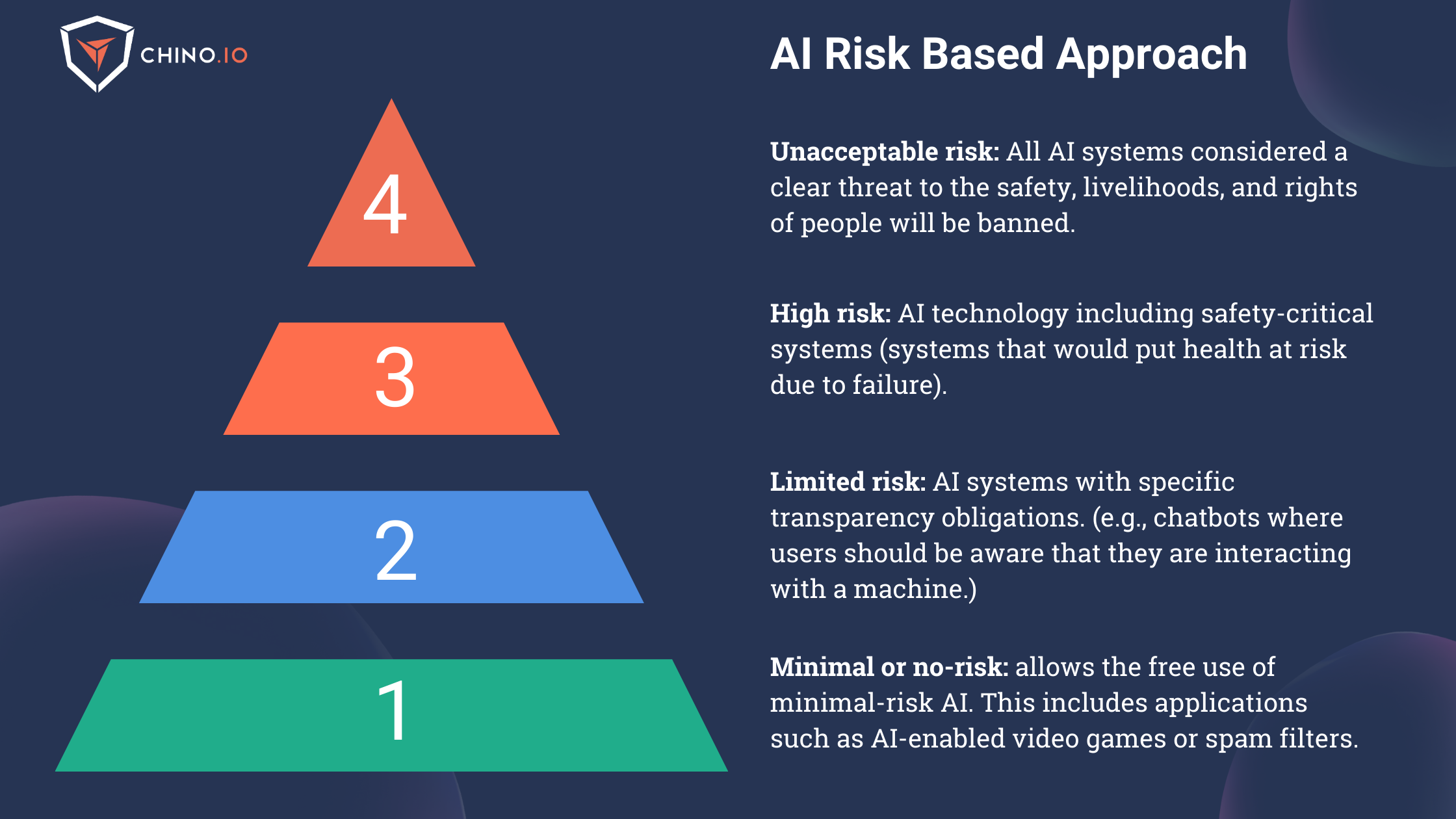

A pyramidal risk-based approach

The main goal of this Act is to define the risks for all AI solutions operating in the EU. To do that, it applies a risk-based approach to categorize AI systems as listed below:

1️⃣ Unacceptable-risk AI systems: “All AI systems considered a clear threat to the safety, livelihoods, and rights of people will be banned, from social scoring by governments to toys using voice assistance that encourages dangerous behavior.”

2️⃣ High-risk AI systems: All remote biometric identification systems are considered high-risk and subject to strict requirements. The use of remote biometric identification in publicly accessible spaces for law enforcement purposes is prohibited. This category will be subject to the most extensive set of requirements, including human oversight, cybersecurity, transparency, and risk management. Most AI systems used in healthcare will fall into this category. High-risk AI systems shall be designed and developed with capabilities enabling the automatic recording of events (logs) while the system is operating.

3️⃣ Limited-risk AI systems: Limited risk refers to AI systems with specific transparency obligations. When using AI systems such as chatbots, users should be made aware that they are interacting with a machine so that they can make an informed decision to continue or step back.

4️⃣ Minimal or no-risk AI systems: The proposal allows the unrestricted use of minimal-risk AI. This includes applications such as AI-enabled video games or spam filters. The vast majority of AI systems currently used in the EU fall into this category.
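To make the four tiers concrete, here is a minimal Python sketch of how a team might record the tier assigned to each of its systems and look up the obligations attached to it. The system names and the `INVENTORY` mapping are hypothetical, and the obligations are paraphrased from the list above; assigning a tier remains a legal assessment that code cannot replace.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Obligations per tier, paraphrased from the list above (illustrative, not legal advice).
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["banned"],
    RiskTier.HIGH: [
        "risk management",
        "human oversight",
        "transparency",
        "cybersecurity",
        "automatic logging while operating",
    ],
    RiskTier.LIMITED: ["tell users they are interacting with a machine"],
    RiskTier.MINIMAL: [],
}

# Hypothetical inventory: system name -> tier assigned by your own legal review.
INVENTORY = {
    "triage-chatbot": RiskTier.LIMITED,
    "spam-filter": RiskTier.MINIMAL,
    "diagnosis-support": RiskTier.HIGH,
}


def obligations_for(system_name: str) -> list[str]:
    """Return the obligations attached to a system's assigned tier."""
    return OBLIGATIONS[INVENTORY[system_name]]


for name, tier in INVENTORY.items():
    duties = obligations_for(name) or ["no specific obligations"]
    print(f"{name}: {tier.value} -> {duties}")
```

Keeping the tier next to each system in one place also gives you a starting point for the inventory step discussed later in this article.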

The effect on digital health companies

It is undeniable that the AI draft regulation will have direct effects on med-tech and digital health companies. There may be misalignments between the Medical Device Regulation (MDR) and the current AI draft regulation.

❌ Most AI-based medical device software would likely be classified as high-risk AI systems: Article 6 of the AI Act - coupled with Annex II (paragraph 11) - explains that all medical devices subject to a conformity assessment procedure by a Notified Body must be classified as high-risk AI systems.

❌ The AI Act establishes certification requirements that differ substantially from the procedures under MDR/IVDR - see Chapter 4, Articles 30 and subsequent.

❌ It requires post-market control to be carried out by two supervisory authorities, which are often unconnected, for medical devices that include AI systems.

If you are interested in this topic, here is a valuable resource about the harmonisation between the AI Act and MDR.

These considerations highlight two main points for all those companies that implement a proprietary AI in their software:

➡️ The Act may change to include adjustments and thus be more aligned with the existing MDR.

➡️ You should start thinking about implementing processes in order to be ready when the Act becomes a reality.

On this last point, we have some tips and suggestions for you.

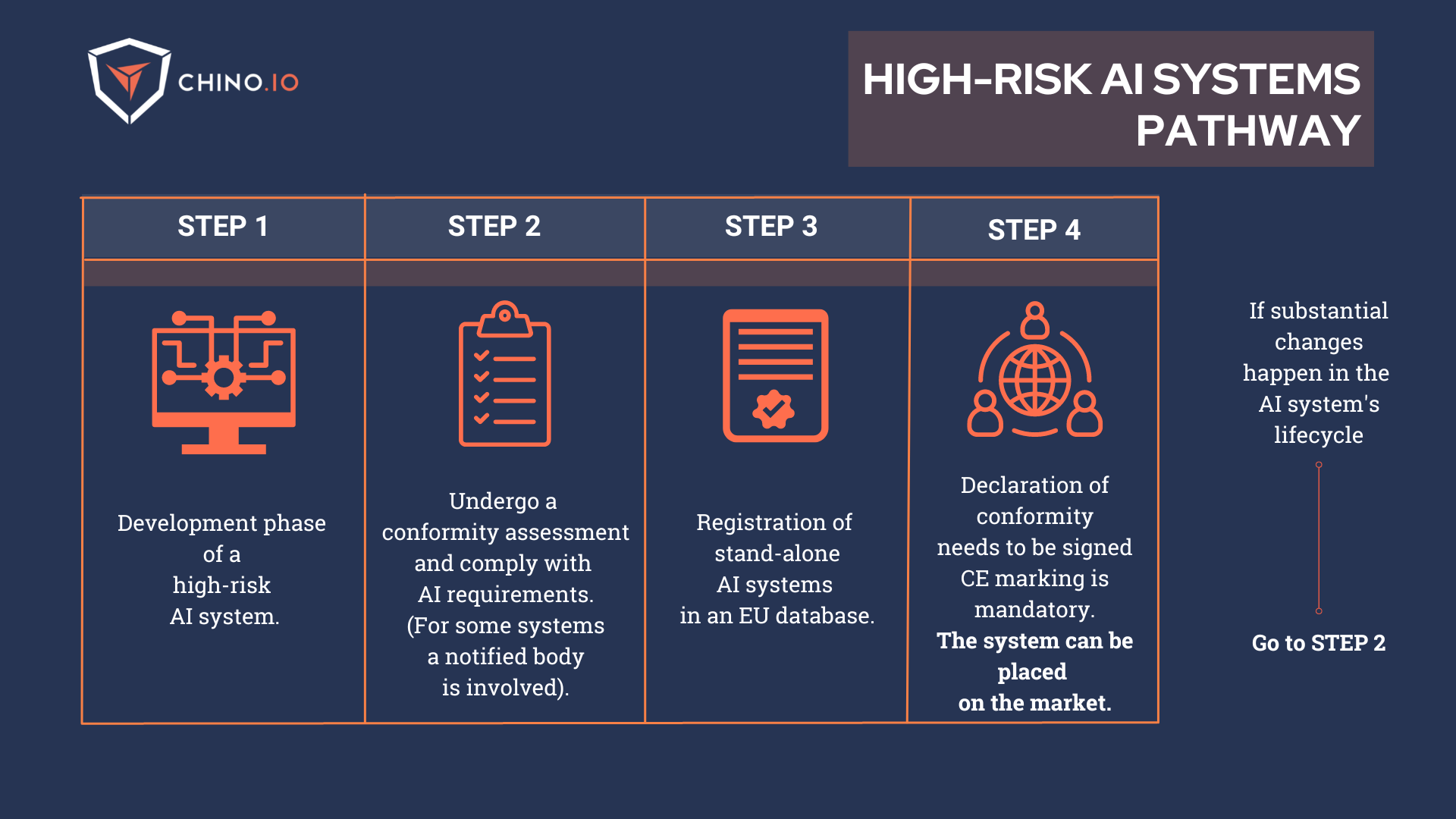

So, you have a high-risk system: what does it mean for you?

As most medical device software placed on the market as a stand-alone product or as a component of a hardware medical device would be classified as a high-risk AI system, it will be subject to strict requirements before it can access the market. Let’s go through some of them:

➡️ Set up adequate risk assessment and mitigation systems;

➡️ Implement logging of activity to ensure traceability of results;

➡️ Provide clear and adequate information to the user;

➡️ Implement strong and reliable human oversight measures to minimize risk.

To prove your compliance, you will need to undergo the Conformity Assessment.

Worried about it? Don’t worry. Let’s dive into the requirements you will need to satisfy.

Conformity Assessment under the AI Act?

The Conformity Assessment is your way to provide accountability for a high-risk AI system and stands as a legal obligation.

The Act defines it as the “process of verifying whether the requirements set out in Title III, Chapter 2 of this Regulation relating to an AI system have been fulfilled”.

Let’s go through the requirements that must be proved:

1️⃣ The quality of data sets used to train, validate, and test the AI systems; the data sets have to be "relevant, representative, free of errors and complete" and have "the appropriate statistical properties."

2️⃣ Technical documentation with all the information on the system and its purpose that authorities need to assess compliance.

3️⃣ Ensure record-keeping in the form of automatic recording of events.

4️⃣ Guarantee transparency and the provision of information to users.

5️⃣ Ensure an appropriate level of human oversight.

6️⃣ Ensure a high level of robustness, accuracy, and cybersecurity.

Keep in mind that all these requirements must be taken into account from the early stages of development of the high-risk AI system (except for the technical documentation, which should be drawn up by the provider).
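As an illustration of the first requirement in the list above, the following Python sketch reports two basic properties of a data set: which records have missing values (completeness) and how the labels are distributed (a rough proxy for representativeness). The field names and records are invented for the example, and these checks are only a starting point; the Act does not prescribe specific tests.

```python
from collections import Counter


def dataset_report(rows, label_key, required_keys):
    """Summarize completeness and label balance for a list of dict records."""
    # Indices of rows where any required field is missing or None.
    incomplete = [
        i for i, row in enumerate(rows)
        if any(row.get(k) is None for k in required_keys)
    ]
    # Share of each label among rows that carry a label.
    labels = Counter(row[label_key] for row in rows if row.get(label_key) is not None)
    total = sum(labels.values())
    shares = {label: count / total for label, count in labels.items()} if total else {}
    return {
        "n_rows": len(rows),
        "incomplete_rows": incomplete,
        "label_shares": shares,
    }


# Toy records; field names and values are illustrative only.
records = [
    {"age": 54, "sex": "F", "label": "positive"},
    {"age": 61, "sex": "M", "label": "negative"},
    {"age": None, "sex": "F", "label": "negative"},
    {"age": 47, "sex": "M", "label": "negative"},
]

report = dataset_report(records, label_key="label", required_keys=["age", "sex", "label"])
print(report)
```

Saving a report like this alongside each training run is one way to build up evidence for the technical documentation over time.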

The Conformity Assessment must be completed before placing the AI system on the EU market.

It can be conducted either internally or by an external notified body. But what’s the difference?

📍 Internal Conformity Assessment: the provider (or the distributor/importer/other third parties) must:

- Verify that the established quality management system complies with the requirements set out in Article 17.

- Examine the information in the technical documentation to assess whether the requirements are met.

- Check and ensure that the design and development process of the high-risk AI system and its post-market monitoring are consistent with the technical documentation.

📍 External Conformity Assessment: the notified body assesses the quality management system and the technical documentation according to the process explained in Annex VII.

3 simple steps to do now (and not waste time and money tomorrow)

This draft represents only the first step toward a common international effort to manage the risks associated with the development of Artificial Intelligence.

Although no measures have been put in place yet, this is the right time to start thinking about creating processes to mitigate risks and ensure compliance.

The sooner digital health companies adapt to this new regulatory reality, the better their long-term success in the market will be.

Moreover, take these regulatory requirements into account from the very early stages of your AI development program.

If you have already started your product journey, the best way of facing this new regulation today is to leverage your work on GDPR since there are quite a few areas in which they are interrelated:

✅ Perform an inventory of all AI systems: if you have a Record of Processing Activities under GDPR, this should be already done.

✅ Implement proper logging in your system: logging will be a critical aspect of AI applications, and Article 12 describes the requirements in depth. When defining how logging should work, keep in mind that GDPR principles continue to apply, particularly data minimization and traceability. You can learn more in our article: https://blog.chino.io/logs-audit-trails-digital-health-apps/

✅ Be ready to demonstrate GDPR compliance: Article 10 of the AIA is all about data governance, and digital health applications are most likely processing sensitive data. Article 10 allows this type of data processing as long as it is “subject to appropriate safeguards for the fundamental rights and freedoms of natural persons, including technical limitations on the re-use and use of state-of-the-art security and privacy-preserving measures, such as pseudonymization, or encryption where anonymization may significantly affect the purpose pursued.” These are also GDPR requirements.
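The logging and pseudonymization points above can be combined in one small sketch. The Python example below writes one structured audit record per prediction and replaces the raw user identifier with a keyed hash (HMAC-SHA256), so that records stay linkable for traceability without storing the identifier itself. The field names, the `PSEUDONYM_KEY` value, and the choice of HMAC are our assumptions for illustration; neither the AI Act nor GDPR prescribes a particular technique, and pseudonymized data is still personal data under GDPR.

```python
import hashlib
import hmac
import json
import logging
from datetime import datetime, timezone

logging.basicConfig(level=logging.INFO, format="%(message)s")
logger = logging.getLogger("ai_audit")

# Assumption: in production this key comes from a secrets manager, not source code.
PSEUDONYM_KEY = b"replace-with-a-secret-key"


def pseudonymize(user_id: str) -> str:
    """Keyed hash so log records stay linkable without storing the raw ID."""
    return hmac.new(PSEUDONYM_KEY, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def build_audit_event(user_id: str, model_version: str, input_ref: str, output: str) -> dict:
    """Build one log record containing only the fields needed for traceability."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "subject": pseudonymize(user_id),
        "model_version": model_version,
        "input_ref": input_ref,  # a reference to the input, not the input itself
        "output": output,
    }


def log_prediction(user_id: str, model_version: str, input_ref: str, output: str) -> dict:
    """Emit the audit record as one JSON line and return it."""
    event = build_audit_event(user_id, model_version, input_ref, output)
    logger.info(json.dumps(event))
    return event


# Hypothetical values, for illustration only.
event = log_prediction("patient-123", "1.4.0", "scan/0001", "refer-to-specialist")
```

Logging a reference to the input rather than the input itself is one way to respect data minimization while keeping each result traceable to the model version that produced it.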

In conclusion, this draft EU AI regulation should be seen as a reminder for companies to ensure they have a solid set of processes to manage AI risk and comply with present and future regulations.

To deliver proper innovation in this field, it is essential to focus efforts on creating frameworks for risk management and compliance to enable continuous improvement and deployment of Artificial Intelligence safely and quickly.

Chino.io, your trusted compliance partner

Working with experts can reduce time-to-market and technical debt and ensure a clear roadmap you can showcase to partners and investors (see our latest case study with Embie).

At Chino.io, we have been combining our technological and legal expertise to help hundreds of companies like yours navigate through EU and US regulatory frameworks, enabling successful launches and reimbursement approvals.

We offer tailored solutions to help you meet the GDPR, HIPAA, DVG, or DTAC requirements mandated for listing your product as a DTx.

Want to know how we can help you? Reach out to us and learn more.