We first wrote about the AI Act back in January 2023.

Since then, the text has been amended to make the legislation more comprehensive.

These rules would ensure that AI developed and used in Europe is fully in line with EU rights and values, including safety, privacy, transparency, non-discrimination, and social and environmental well-being.

TL;DR: The AI Act is not in force yet. On 14 June 2023, Members of the European Parliament adopted the Parliament's negotiating position on the AI Act.

The talks have now begun with EU countries in the Council on the law's final form.

The goal is to reach an agreement by the end of this year.

One step back: What is the AI Act?

The AI Act is a proposed European law on Artificial Intelligence and would be the world's first comprehensive set of rules regulating AI.

The Act contains obligations that apply to AI systems from the design stage onwards. Startups and companies should start preparing for this regulation now, so they are ready to enter the market when it comes into force.

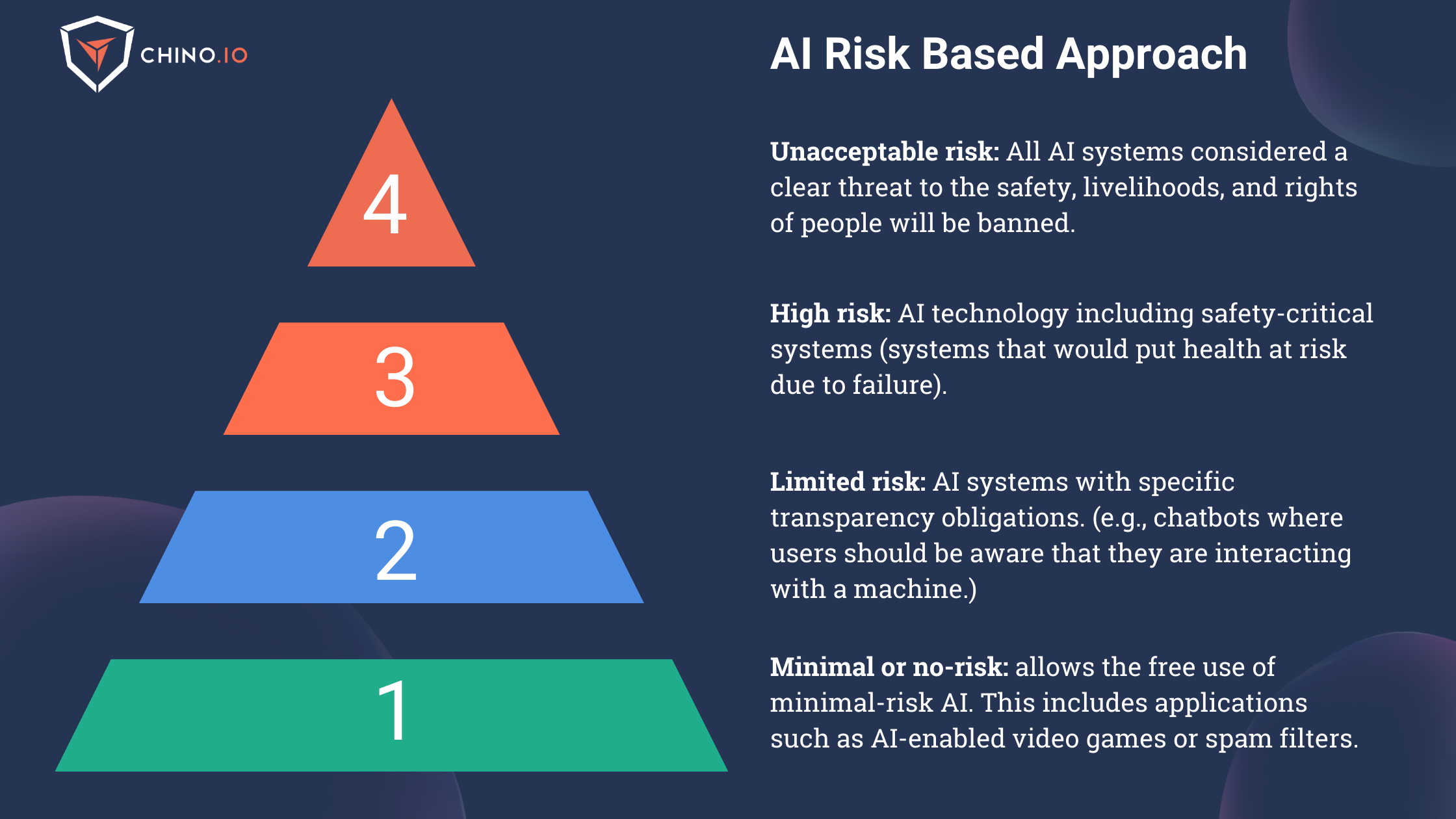

Getting AI systems compliant: a risk-based approach

The Act adopts a risk-based approach: depending on how likely an AI system is to violate fundamental European values and rights, some AI practices will be prohibited, while others will be subject to strict obligations. This creates four categories of AI systems:

1️⃣ Unacceptable-risk AI systems: “All AI systems considered a clear threat to the safety, livelihoods, and rights of people will be banned, from social scoring by governments to toys using voice assistance that encourages dangerous behavior.”

2️⃣ High-risk AI systems: All remote biometric identification systems are considered high-risk. This category is subject to the most extensive requirements, including human oversight, cybersecurity, transparency, and risk management. Most AI systems used in healthcare will fall into this category. These systems must be designed and developed with capabilities that enable the automatic recording of events (logs) while the high-risk AI system is operating.

3️⃣ Limited-risk AI systems: Limited risk refers to AI systems, such as chatbots, that are subject to specific transparency obligations. Users should be aware that they are interacting with a machine, so they can make an informed decision to continue interacting or to step back.

4️⃣ Minimal or no-risk AI systems: The proposal allows the unrestricted use of minimal-risk AI. This includes applications such as AI-enabled video games or spam filters. Most of the AI systems currently used in the EU fall into this category.
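For teams building the AI-system inventory mentioned later in this article, the four tiers can be recorded as a simple lookup. The sketch below is a minimal Python illustration under our own assumptions: the names `RiskTier`, `OBLIGATIONS`, `obligations_for`, and `may_deploy` are hypothetical, the obligation strings paraphrase the list above rather than the legal text, and the tier itself is assigned by a human reviewer, not computed by the code.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk categories described above (hypothetical encoding)."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Obligations paraphrased from this article; illustrative only, not legal text.
OBLIGATIONS: dict[RiskTier, list[str]] = {
    RiskTier.UNACCEPTABLE: ["banned: cannot be placed on the EU market"],
    RiskTier.HIGH: [
        "conformity assessment",
        "risk management",
        "human oversight",
        "cybersecurity",
        "transparency",
        "automatic logging while operating",
    ],
    RiskTier.LIMITED: ["tell users they are interacting with a machine"],
    RiskTier.MINIMAL: [],  # unrestricted use
}


def obligations_for(tier: RiskTier) -> list[str]:
    """Return a copy of the obligations attached to a tier."""
    return list(OBLIGATIONS[tier])


def may_deploy(tier: RiskTier) -> bool:
    """Only unacceptable-risk systems are prohibited outright."""
    return tier is not RiskTier.UNACCEPTABLE


# Hypothetical inventory: tiers are assigned by a reviewer, not inferred.
inventory = {
    "customer-support chatbot": RiskTier.LIMITED,
    "email spam filter": RiskTier.MINIMAL,
    "AI-based medical device software": RiskTier.HIGH,
}

for system, tier in inventory.items():
    print(f"{system} [{tier.value}]: {obligations_for(tier) or 'no specific obligations'}")
```

Keeping the tier as an explicit, reviewed field makes it easy to see which obligations each system carries; the legal classification itself still requires a case-by-case analysis.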

The differences between the previous draft and the Parliament's version of the AI Act

There are some differences between the draft version we analysed in our last article (make sure to check it out to fully understand what this Act means for your business and how to overcome the challenges that may arise) and the negotiating position adopted by the European Parliament.

These differences are largely a response to the disruptive generative AI solutions, like ChatGPT, introduced in recent months.

1️⃣ A new (and more detailed) definition of AI

The previous version defined an AI system as “software that is developed with one or more of the techniques and approaches listed in Annex I and can, for a given set of human-defined objectives, generate outputs such as content, predictions, recommendations, or decisions influencing the environments they interact with[...]”

In the new proposed act, AI is defined as “a machine-based system that is designed to operate with varying levels of autonomy, and that can, for explicit or implicit objectives, generate outputs such as predictions, recommendations, or decisions that influence physical or virtual environments.”

The focus is now on machine learning, as opposed to automated decision-making.

2️⃣ Introduction of references about Generative AI

Initially, there were no references to general-purpose AI or generative AI.

When OpenAI took the world by storm with ChatGPT in late November 2022, the Council of the EU approved some last-minute amendments to its version of the AI Act to include a section on general-purpose AI systems.

This created a separate regime for general-purpose AI, exempting such systems from most of the rules in the AI Act and relying on the European Commission's implementing acts to subject specific general-purpose AI systems to the high-risk rules after consultations and a detailed impact assessment.

Under the Parliament's position, generative AI will also have to meet strict transparency requirements, such as:

✅ Disclosing, clearly and transparently, that the content was generated by Artificial Intelligence.

✅ Designing the AI model to prevent it from generating illegal content.

✅ Publishing summaries of the copyrighted data used for training.

3️⃣ New harm assessment for High-Risk AI

Let’s picture a scenario: you are developing a generative AI chatbot and want to put it on the market.

What would you need to do to comply with the Act?

⭕️ Is your AI categorised as “high-risk”? If the answer is yes, you need to undergo a conformity assessment process.

⭕️ Determine whether your AI can work with anonymised data or needs personal data.

⭕️ As an AI developer and manufacturer, you must provide a document explaining how your system works.

⭕️ If your AI system processes personal data to produce its results, you must provide a transparent link to your privacy policy (and thus a direct connection with the GDPR).

⭕️ You must provide information about your system's production chain (specifying who the manufacturer, distributor, and seller are). Keep in mind that this requires establishing contractual relationships, which may need to be regulated with a Data Processing Agreement.

4️⃣ Expansion of prohibited AI practices

The European Parliament added to the list of prohibited AI practices, focusing on intrusive and discriminatory uses of AI systems. Here is the updated list:

📌 Real-time remote biometric identification systems in public spaces;

📌 Biometric categorisation systems using sensitive data (e.g., gender, race, ethnicity, citizenship, religion, political orientation);

📌 Post remote biometric identification systems;

📌 Predictive AI policing systems;

📌 Emotion recognition systems in settings such as law enforcement, the workplace, and educational institutions;

📌 Untargeted scraping of facial images from the web or CCTV footage in order to create facial recognition databases.

5️⃣ Introduction of Ethics

Ethical principles have been embedded into this new draft. All operators within the scope of the AI Act are required to make their best effort to develop and use AI systems in line with general principles that promote a human-centric approach to ethical and trustworthy AI.

Did you know? For all projects and activities funded by the European Union, ethics is an integral part of research from beginning to end, and ethical compliance is seen as pivotal to achieving real research excellence. We are working on ethics and compliance issues as partners in cutting-edge EU projects such as AIccelerate, Long-Covid, Ascertain, and RES-Q+.

6️⃣ Right to Complain and to Receive an Explanation of High-Risk AI Decisions

This point has a direct link to the GDPR. If a high-risk AI system makes a decision that prevents you from accessing a service, you (as the affected person) have the right to complain, request a human review, and obtain an explanation of the criteria behind the automated decision.

People directly affected by a decision of a high-risk AI system that significantly affects their rights are entitled to request and receive an explanation of the AI system's role in the decision-making procedure, the main parameters of the decision taken, and the related input data.
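To answer such a request, a provider needs to keep, for each decision, the elements listed above: the AI system's role, the main parameters, and the related input data. The Python sketch below shows one possible shape for such a record, assuming a simple in-memory object; the class and field names (`DecisionRecord`, `input_data_ref`, `request_human_review`, and so on) are hypothetical and are not defined by the AI Act.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """Hypothetical per-decision record kept so an affected person can get an explanation."""
    decision_id: str
    outcome: str                   # e.g. "service denied"
    ai_role: str                   # how the AI system contributed to the decision
    parameters: dict[str, float]   # main parameters behind the outcome
    input_data_ref: str            # pointer to the input data, not a copy of it
    created_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))
    human_review_requested: bool = False

    def explanation(self) -> str:
        """Plain-language summary of the AI system's role, parameters, and inputs."""
        params = ", ".join(f"{k}={v}" for k, v in sorted(self.parameters.items()))
        return (
            f"Decision {self.decision_id}: {self.outcome}. "
            f"Role of the AI system: {self.ai_role}. "
            f"Main parameters: {params}. "
            f"Input data: {self.input_data_ref}."
        )

    def request_human_review(self) -> None:
        """Flag the decision for a human check after a complaint."""
        self.human_review_requested = True


record = DecisionRecord(
    decision_id="d-1",
    outcome="service denied",
    ai_role="risk score reviewed by a clinician",
    parameters={"risk_score": 0.82},
    input_data_ref="inputs/d-1",
)
print(record.explanation())
```

Storing a reference to the input data, rather than a copy, keeps the record in line with GDPR data minimisation while still letting a reviewer retrieve the data during a human check.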

Want to know more about the requirements? Get a free 30-minute discovery call with our experts!

Working on Digital Therapeutics (DTx) or other AI-based services? Here is what you should keep in mind

It is undeniable that the draft AI regulation will directly affect med-tech and digital health companies. There may be misalignments and challenges between the MDR and the current draft AI regulation.

❌ Most AI-based medical device software would likely be classified as high-risk: Article 6 of the AI Act, together with Annex II (point 11), states that AI-based medical devices subject to a conformity assessment procedure by a Notified Body must be classified as high-risk AI systems.

❌ The AI Act establishes certification requirements that differ substantially from the procedures under the MDR/IVDR (see Chapter 4, Articles 30 et seq.).

❌ It provides for post-market control of AI-based medical devices by two supervisory authorities that, most of the time, are not connected to each other.

The sooner digital health companies adapt to this new regulatory reality, the better their long-term success in the market will be.

The best way to prepare for this new regulation today is to build on your GDPR compliance work, since the two regulations overlap in several areas:

✅ Perform an inventory of all AI systems: if you have a Record of Processing Activities under GDPR this should be already done.

✅ Implement proper logging in your system: logging will be a critical aspect of AI applications, and Article 12 is entirely devoted to its requirements. When defining how logging should work, keep in mind that GDPR principles continue to apply, particularly data minimization and traceability. You can learn more in our article here: https://blog.chino.io/logs-audit-trails-digital-health-apps/

✅ Be ready to demonstrate GDPR compliance: Article 10 of the AIA is all about data governance. Digital health applications, in particular, are likely to process sensitive data. Article 10(5) of the AIA allows this type of processing for bias monitoring and correction, as long as it is “subject to appropriate safeguards for the fundamental rights and freedoms of natural persons, including technical limitations on the re-use and use of state-of-the-art security and privacy-preserving measures, such as pseudonymisation, or encryption where anonymisation may significantly affect the purpose pursued.” These safeguards mirror the GDPR requirements.

✅ Explain how your AI works in a detailed and transparent way, especially if it falls under the generative AI category. You can do this through videos, infographics, detailed technical documentation, and training.
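As a rough illustration of the logging and pseudonymisation points above, here is a minimal Python sketch of a log line for an AI system. It assumes, hypothetically, that an allow-list of fields enforces data minimization and that user identifiers are pseudonymised with a keyed hash (HMAC-SHA256) whose key is stored separately from the logs. The names `PSEUDONYM_KEY`, `ALLOWED_FIELDS`, and `log_event` are our own; neither the AI Act nor the GDPR prescribes this design.

```python
import hashlib
import hmac
import json
from datetime import datetime, timezone

# Hypothetical secret: in practice, load it from a key vault kept apart from the logs.
PSEUDONYM_KEY = b"replace-with-a-secret-key"

# Data minimization: only these fields are ever written to the log.
ALLOWED_FIELDS = {"event", "model_version", "output_class"}


def pseudonymise(user_id: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Keyed hash (HMAC-SHA256): stable per user, unlinkable to the ID without the key."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def log_event(user_id: str, details: dict, key: bytes = PSEUDONYM_KEY) -> str:
    """Build one JSON log line for an operation of the AI system."""
    entry = {k: v for k, v in details.items() if k in ALLOWED_FIELDS}
    entry["subject"] = pseudonymise(user_id, key)
    entry["timestamp"] = datetime.now(timezone.utc).isoformat()
    return json.dumps(entry, sort_keys=True)


if __name__ == "__main__":
    # The patient's name is dropped; the ID is replaced by its pseudonym.
    print(log_event("patient-42", {
        "event": "inference",
        "model_version": "1.3",
        "output_class": "high",
        "name": "Jane Doe",
    }))
```

Because the same user always maps to the same pseudonym, events remain traceable across the log, while re-identification requires access to the key. Note that pseudonymised data is still personal data under the GDPR.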

What should you expect next?

The EU Parliament, Commission, and Council will now negotiate the final form of the law with EU countries, a process known as trilogue negotiations. The aim is to reach an agreement by the end of this year.

As the AI Act evolves throughout these inter-institutional negotiations, the EU institutions may adopt changes in response to new technologies and developments, as they did with ChatGPT.

In the best-case scenario, the AI Act should be adopted by the start of 2024.

All companies and startups that provide, deploy, or distribute AI systems in the EU market should start to consider implementing a risk assessment process. (If you want to know more about that, check our previous article.)

Remember that the AI Act applies to all providers and companies that develop, use, or implement AI systems in the EU market, whether they are established inside or outside the EU (as long as the output produced by the AI system is used within the EU).

Looking to become GDPR compliant? Chino.io, your trusted compliance partner

Working with experts can reduce time-to-market and technical debt and ensure a clear roadmap you can showcase to partners and investors (see our latest case study with Embie).

At Chino.io, we have been combining our technological and legal expertise to help hundreds of companies like yours navigate through EU and US regulatory frameworks, enabling successful launches and reimbursement approvals.

We offer tailored solutions to help you meet the GDPR, HIPAA, DVG, or DTAC requirements mandated for listing your product as a DTx or DiGA.

Want to know how we can help you? Reach out to us and learn more.