A few weeks ago, we woke up to some intriguing news: the Italian data protection authority (the Garante) ordered OpenAI to stop processing Italian users' data, with immediate effect.

The block ordered by the Garante does not target a specific service but the company that provides them. As a result, all services developed by OpenAI are affected, including the free version of ChatGPT, its paid plans, and the APIs.

What does this block mean for other companies developing AI? Could other kinds of Artificial Intelligence face a similar stop?

Let’s jump into it!

Why did the Italian DPA block ChatGPT?

The Garante ordered ChatGPT to be stopped because it found the service not compliant with the GDPR.

It all started on March 20, when a data breach was discovered. That day, during an outage, personal data of some ChatGPT Plus subscribers, including payment-related information, was exposed. The breach was caused by a bug in an open-source library, which also allowed some users to see the titles of other active users' chat histories.

After this episode, the Italian supervisory authority (SA) opened an investigation, which raised several issues from a GDPR point of view.

Let's go through them together:

1️⃣ Gaps in the age verification process: The Garante argued that ChatGPT "exposes children to receiving responses that are inappropriate to their age and awareness." It noted that the platform is supposed to be only for users older than 13, yet there is no mechanism to verify users' age. The GDPR requires special care when processing children's data (for example, to protect children from potentially harmful content), which in practice means putting effective age verification in place.

2️⃣ No proper information to data subjects: the information provided by ChatGPT does not match the actual service. OpenAI stated that no personal data was processed via ChatGPT, yet users' queries were stored, and there is no disclaimer about it. Every query is associated with an account and may contain personal and sensitive information.

3️⃣ No proper legal basis to justify the collection and storage of personal data: it is unclear whether OpenAI collects and processes personal and sensitive data to train its algorithms. Moreover, OpenAI does not provide a privacy policy to users or to the data subjects whose data it collects and processes through the service.

What is the impact of being blocked by a Data Protection Authority in Europe?

To get an overview of this impact, we need to answer two main questions about your users:

➡️ Can B2C users still use your service?

Yes, they can, for example by connecting through a VPN from another country, which is not illegal for the user. In the case of ChatGPT, the restriction applies to OpenAI, so accessing the service is not illegal on the user's side.

On the other hand, it is illegal for OpenAI to provide the service to Italian users, and it should implement strong measures to mitigate this risk.

➡️ Can businesses use your service?

This opens a much more complicated scenario. Technically, businesses can use it, but if they do, they expose themselves to the same fines. If you use a service that is under investigation by a Data Protection Authority, you are likely to be fined as well unless the issues are fixed within the given time period. This is a big problem if your business model relies on B2B.

In other words, companies that want to use your service will have an increased risk when working with you.

If a Data Protection Authority has blocked you for non-compliance, why would a company take on a service that puts it at risk of a fine? It may automatically conclude that, for the time being, your service is dangerous for it.

What are the potential consequences?

At the time of writing, Italy’s privacy watchdog is the only authority in Europe that has blocked ChatGPT.

The Italian case may be the first in a series of national actions across the EU to address privacy concerns (as happened with Google Analytics last year). We should expect DPAs in other EU countries to conduct their own analyses in the coming months.

After Italy's decision to block access to the chatbot, the European Consumer Organisation (BEUC) called on all authorities to investigate major AI chatbots.

Ursula Pachl, deputy director general of BEUC, warned: “There are serious concerns growing about how ChatGPT and similar chatbots might deceive and manipulate people. These AI systems need greater public scrutiny, and public authorities must reassert control over them.”

So the question arises naturally: could other EU DPAs block ChatGPT and other AI-based services?

It is possible; it depends on the decisions of the other European data protection authorities.

What can AI startups learn from this episode?

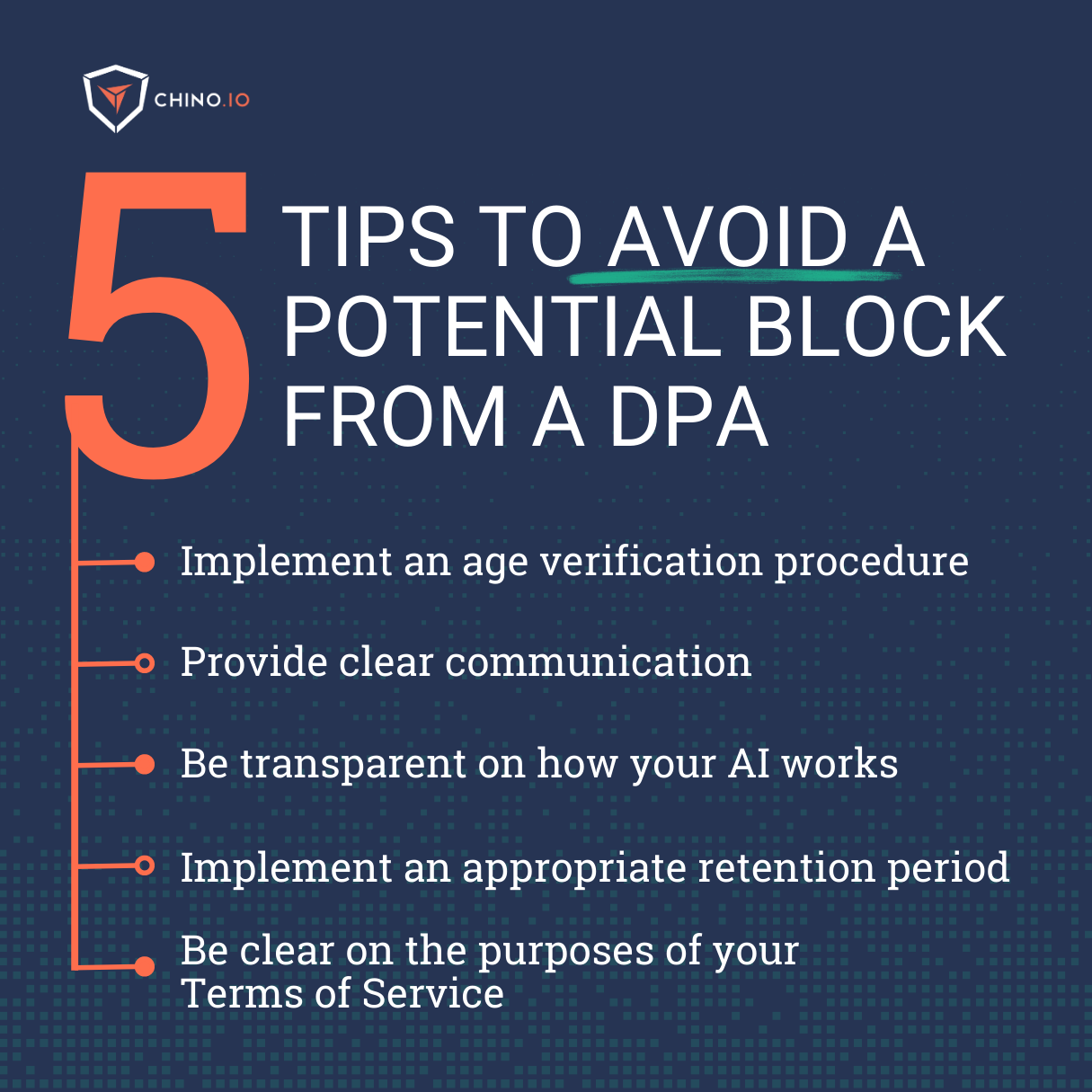

If you want to avoid being blocked for the same reasons as OpenAI in Italy, we suggest you work on these points:

🔎 Implement an age verification procedure: make sure you have implemented all the measures needed to prevent children from accessing your AI-based service. You should explicitly ask for users' date of birth and, if your service can also be used by children, ask for a parent's consent. Remember that using Google Authentication does not simplify the procedure; if you use it, keep in mind that you are transferring data to the US.

🔎 Provide clear communication: you need a clear privacy notice, written in plain language, that explains how the system works. To be as clear as possible (and be understood by your users), answer common questions such as: How do we train the AI? How do we use the data that users put into the system? You can support the text with icons, diagrams, or even videos. The clearer your communication, the better the outcome.

🔎 Be transparent about how your AI works: artificial intelligence may seem mysterious to those who are not close to the tech world. This is why you should provide accessible explanations of how your AI works. This will also help you demonstrate your compliance once the upcoming AI Act comes into force.

🔎 Implement an appropriate retention period for data: make sure your privacy policy states the maximum period for which collected data is retained. OpenAI didn’t provide any information about the retention period or the criteria used to determine it. In general, you can keep data for as long as you need it to achieve your purposes; the point is that you must justify, and be able to prove, why you need it for that period of time.

🔎 Be clear about the purposes of your Terms of Service: here, you should be as specific, clear, and transparent as possible about the rules that users must follow when using the service.
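Two of these recommendations, the age check and the retention period, translate fairly directly into code. The Python sketch below is a minimal illustration under stated assumptions, not a compliance solution: all names are hypothetical, the default minimum age of 13 mirrors the threshold the Garante cited for ChatGPT, and the default consent age of 16 is the GDPR Article 8 default, which member states may lower (but not below 13), so both thresholds should be configured per country. The 365-day retention period in the demo is an arbitrary example; the real period is the one you can justify and state in your privacy policy.

```python
from dataclasses import dataclass
from datetime import date, datetime, timedelta, timezone
from enum import Enum


class AgeDecision(Enum):
    DENY = "deny"                                      # below the minimum age
    NEEDS_PARENTAL_CONSENT = "needs_parental_consent"  # below the consent age
    ALLOW = "allow"


def age_on(birth_date: date, today: date) -> int:
    """Return the number of full years between birth_date and today."""
    years = today.year - birth_date.year
    # Subtract one year if this year's birthday has not happened yet.
    if (today.month, today.day) < (birth_date.month, birth_date.day):
        years -= 1
    return years


def check_age(birth_date: date, today: date,
              minimum_age: int = 13, consent_age: int = 16) -> AgeDecision:
    """Hypothetical age gate; both thresholds are assumptions to configure per country."""
    age = age_on(birth_date, today)
    if age < minimum_age:
        return AgeDecision.DENY
    if age < consent_age:
        return AgeDecision.NEEDS_PARENTAL_CONSENT
    return AgeDecision.ALLOW


@dataclass
class StoredRecord:
    record_id: str
    created_at: datetime  # timezone-aware timestamp of when the data was collected


def purge_expired(records: list[StoredRecord], retention: timedelta,
                  now: datetime) -> tuple[list[StoredRecord], list[StoredRecord]]:
    """Split records into (kept, expired) based on the stated retention period."""
    cutoff = now - retention
    kept = [r for r in records if r.created_at >= cutoff]
    expired = [r for r in records if r.created_at < cutoff]
    return kept, expired


if __name__ == "__main__":
    print(check_age(date(2012, 6, 1), date(2023, 4, 15)))  # AgeDecision.DENY (age 10)

    now = datetime.now(timezone.utc)
    records = [StoredRecord("q-1", now - timedelta(days=30)),
               StoredRecord("q-2", now - timedelta(days=400))]
    kept, expired = purge_expired(records, timedelta(days=365), now)
    print([r.record_id for r in expired])  # ['q-2']
```

A self-declared date of birth is only a first line of defence: the check is only as reliable as the data the user enters. In a real service, `purge_expired` would run as a scheduled job that deletes (or anonymizes) the expired records in the underlying database; the list-based version only shows the cutoff logic.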

And remember: collect only the minimum amount of data necessary, including the data you use to train your AI.
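Data minimization is easiest to enforce at the point where data enters storage. A minimal Python sketch, assuming a hypothetical allowlist of fields (`ALLOWED_FIELDS`) for which the service has a documented purpose: any field not on the list is dropped before the record is saved or used for training.

```python
from typing import Any

# Hypothetical allowlist: the only fields this example service has a documented
# purpose for. Every other field is dropped before storage.
ALLOWED_FIELDS = frozenset({"user_id", "prompt", "created_at"})


def minimize(record: dict[str, Any],
             allowed: frozenset[str] = ALLOWED_FIELDS) -> dict[str, Any]:
    """Return a copy of record that contains only the allowlisted fields."""
    return {key: value for key, value in record.items() if key in allowed}


if __name__ == "__main__":
    raw = {
        "user_id": "u-42",
        "prompt": "Summarise this contract",
        "created_at": "2023-04-15T10:00:00Z",
        "ip_address": "203.0.113.7",        # not needed for the stated purpose
        "device_fingerprint": "abc123",     # not needed for the stated purpose
    }
    print(minimize(raw))
```

An allowlist only controls which fields are kept; personal data that users type into free-text fields such as the prompt still needs separate handling.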

Chino.io: your trusted compliance partner

The one-stop shop for solving all privacy and security compliance aspects.

As a partner of our clients, we combine regulatory and technical expertise with a modular IT platform that allows digital applications to eliminate compliance risks and save costs and time.

Chino.io makes compliant-by-design innovation happen faster, combining legal know-how and data security technology for innovators.

To learn more, book a call with our experts.